Dev Duniya

Mar 19, 2025

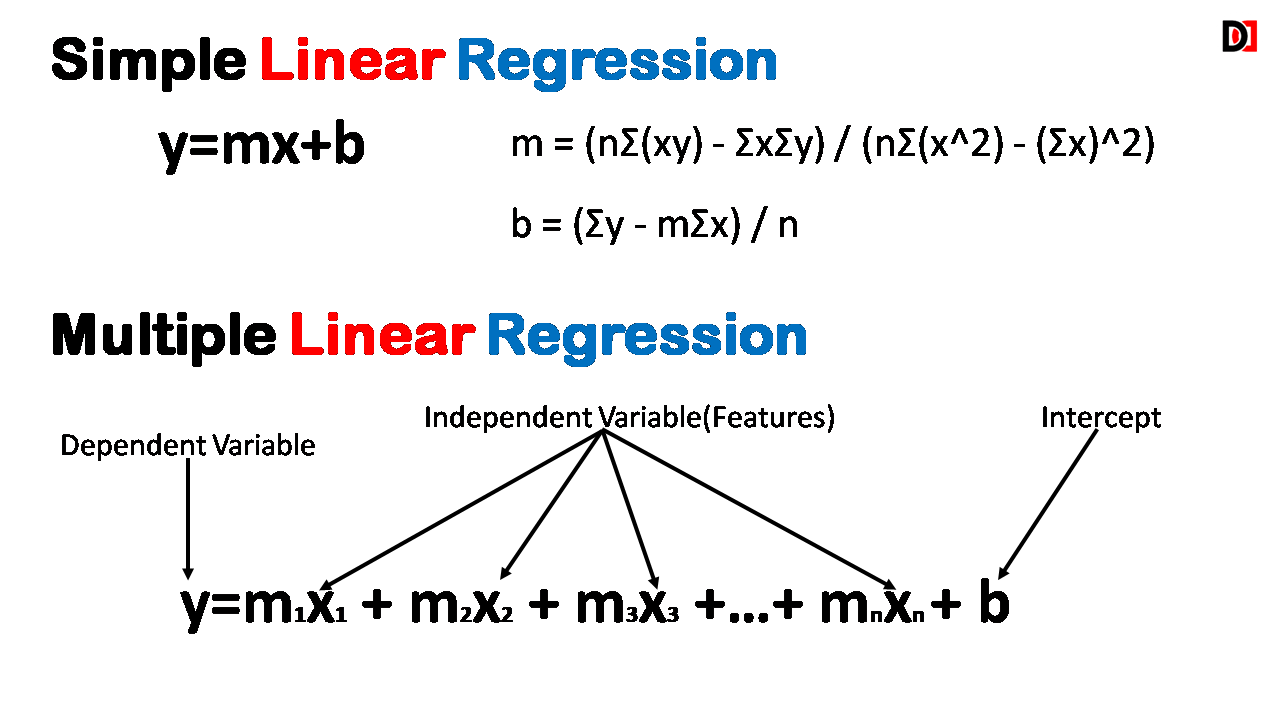

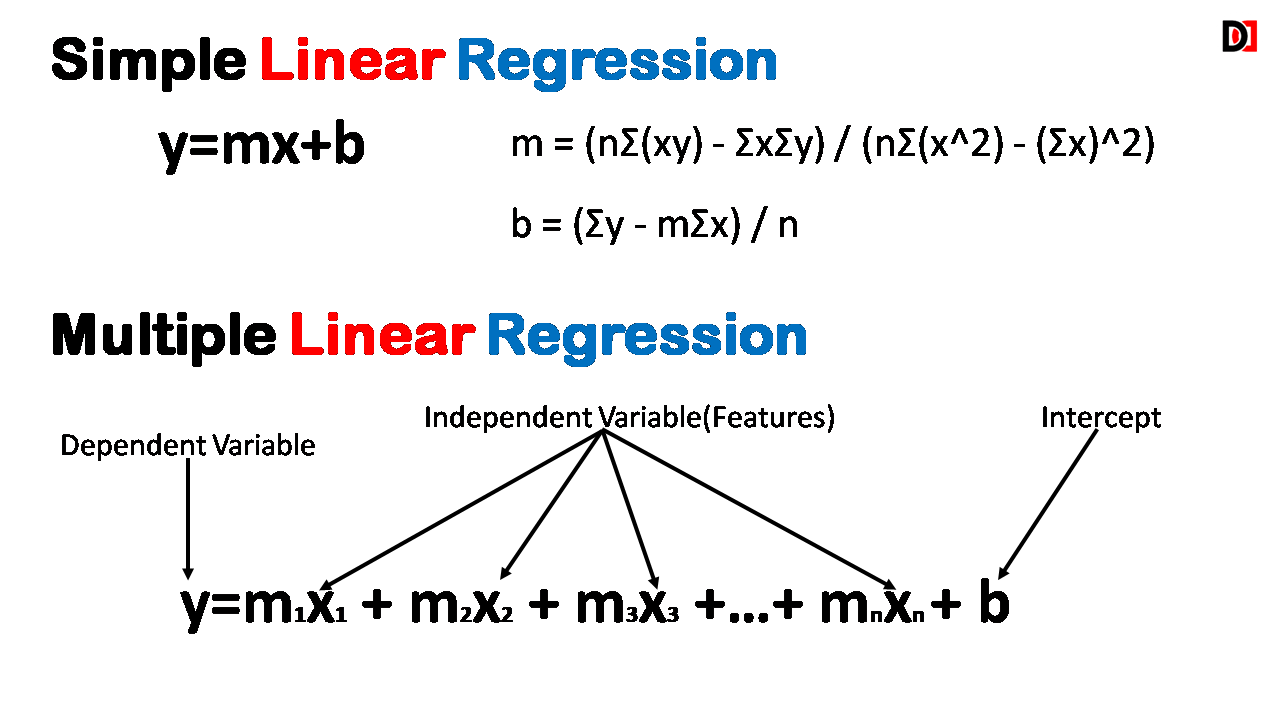

Multiple Linear Regression is an extension of Simple Linear Regression. While Simple Linear Regression models the relationship between one independent variable and one dependent variable, Multiple Linear Regression considers the influence of multiple independent variables on a single dependent variable.

1. Multiple Independent Variables: Instead of a single predictor, we now have multiple factors influencing the dependent variable.

2. Dependent Variable: Remains the same – the variable we are trying to predict.

3. Linear Relationship: The model assumes a linear relationship between the dependent variable and the combined effect of the independent variables.

The equation for Multiple Linear Regression is:

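y = b0 + b1*x1 + b2*x2 + ... + bn*xn

where:

- y is the dependent variable (the value being predicted)
- x1, x2, ..., xn are the independent variables
- b0 is the intercept (the predicted value of y when every independent variable is 0)
- b1, b2, ..., bn are the coefficients, each giving the change in y for a one-unit change in its variable while the other variables are held fixed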
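To make the equation concrete, here is a minimal sketch that evaluates it for a single observation with three predictors. The intercept and coefficient values are made up purely for illustration; they are not fitted to any data.

```python
import numpy as np

# Hypothetical values chosen only for illustration (not fitted to data)
b0 = 50.0                            # intercept
b = np.array([0.125, -2.0, 10.0])    # coefficients b1, b2, b3
x = np.array([1500.0, 10.0, 3.0])    # one observation: x1, x2, x3

# y = b0 + b1*x1 + b2*x2 + b3*x3, written as a dot product
y_hat = b0 + np.dot(b, x)
print(y_hat)
```

With these numbers the sum is 50 + 187.5 - 20 + 30 = 247.5. Writing the weighted sum as a dot product keeps the code unchanged no matter how many predictors there are.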

In Multiple Linear Regression, we are essentially trying to find the best-fitting hyperplane (a multi-dimensional plane). With the usual ordinary least squares fit, "best-fitting" means the hyperplane that minimizes the sum of squared differences between the actual values of the dependent variable and the predicted values.
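The idea of a best-fitting hyperplane can be sketched with plain NumPy. The example below assumes an ordinary least squares fit and uses synthetic data invented for this illustration (generated from y = 3 + 2*x1 - x2 plus noise); `np.linalg.lstsq` then recovers coefficients close to those values.

```python
import numpy as np

# Synthetic data for illustration only: 50 observations, 2 predictors,
# generated from y = 3 + 2*x1 - 1*x2 plus a little random noise
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(50, 2))
y = 3.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(0, 0.5, size=50)

# Prepend a column of ones so the first coefficient acts as the intercept b0
X_design = np.column_stack([np.ones(len(X)), X])

# Solve for the coefficients that minimize the sum of squared residuals
beta, *_ = np.linalg.lstsq(X_design, y, rcond=None)

# Residuals are the gaps between actual and predicted values
residuals = y - X_design @ beta
sse = np.sum(residuals ** 2)

print("Estimated [b0, b1, b2]:", beta)
print("Sum of squared errors:", sse)
```

Any other choice of coefficients gives a sum of squared errors at least as large as `sse`, which is what "best-fitting" means here.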

Let's say we want to predict house prices based on size (square footage), age, and number of bedrooms. We have the following data:

| Size (sq ft) | Age (years) | Bedrooms | Price (in thousands) |
|---|---|---|---|
| 1500 | 10 | 3 | 250 |
| 2000 | 5 | 4 | 300 |
| 1800 | 8 | 3 | 280 |
| 1200 | 20 | 2 | 180 |
| 2500 | 2 | 5 | 400 |

Using scikit-learn in Python:

    import numpy as np
    from sklearn.linear_model import LinearRegression

    # Independent variables: size (sq ft), age (years), bedrooms
    X = np.array([[1500, 10, 3],
                  [2000, 5, 4],
                  [1800, 8, 3],
                  [1200, 20, 2],
                  [2500, 2, 5]])

    # Dependent variable: house price (in thousands)
    y = np.array([250, 300, 280, 180, 400])

    # Fit the model to the data
    model = LinearRegression()
    model.fit(X, y)

    # New data point: 1850 sq ft, 12 years old, 4 bedrooms
    new_house = np.array([[1850, 12, 4]])
    predicted_price = model.predict(new_house)

    print("Predicted Price:", predicted_price)

This will give you the predicted price (in thousands) of a house with the given characteristics.
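To connect the fitted model back to the equation, the sketch below reads the intercept and coefficients from scikit-learn's `intercept_` and `coef_` attributes and reproduces the prediction by hand. It reuses the same five houses; the variable name `manual` is introduced here only for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Same data as the example above: size (sq ft), age (years), bedrooms
X = np.array([[1500, 10, 3],
              [2000, 5, 4],
              [1800, 8, 3],
              [1200, 20, 2],
              [2500, 2, 5]])
y = np.array([250, 300, 280, 180, 400])  # price in thousands

model = LinearRegression().fit(X, y)

# b0 and (b1, b2, b3) from the regression equation
print("Intercept (b0):", model.intercept_)
print("Coefficients (b1, b2, b3):", model.coef_)

# Reproduce model.predict by hand: y = b0 + b1*x1 + b2*x2 + b3*x3
new_house = np.array([[1850, 12, 4]])
manual = model.intercept_ + new_house @ model.coef_

print("Manual prediction:", manual)
print("model.predict:    ", model.predict(new_house))
```

The two printed predictions should agree up to floating-point rounding. With only five rows and four fitted parameters, the model follows the training data very closely, so the coefficients are best read as a demonstration of the mechanics and not as reliable estimates.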

Multiple Linear Regression is a powerful technique for modeling the relationship between a dependent variable and multiple independent variables. By understanding its principles and limitations, you can effectively apply this method to various real-world problems.